Apple botched the Apple Intelligence launch, but its long-term strategy is sound

I’ve spent a week with Apple Intelligence—here are the takeaways.

Apple Intelligence includes features like Clean Up, which lets you pick from glowing objects it has recognized to remove them from a photo.

Credit: Samuel Axon

Ask a few random people about Apple Intelligence and you’ll probably get quite different responses.

One might be excited about the new features. Another could opine that no one asked for this and the company is throwing away its reputation with creatives and artists to chase a fad. Another still might tell you that regardless of the potential value, Apple is simply too late to the game to make a mark.

The release of Apple’s first Apple Intelligence-branded AI tools in iOS 18.1 last week makes all those perspectives understandable.

The first wave of features in Apple’s delayed release shows promise—and some of them may be genuinely useful, especially with further refinement. At the same time, Apple’s approach seems rushed, as if the company is cutting some corners to catch up where some perceive it has fallen behind.

That impatient, unusually undisciplined approach to the rollout could undermine the value proposition of AI tools for many users. Nonetheless, Apple’s strategy might just work out in the long run.

What’s included in “Apple Intelligence”

I’m basing those conclusions on about a week spent with both the public release of iOS 18.1 and the developer beta of iOS 18.2. Between them, the majority of features announced back in June under the “Apple Intelligence” banner are present.

Let’s start with a quick rundown of which Apple Intelligence features are in each release.

iOS 18.1 public release

- Writing Tools

- Proofreading

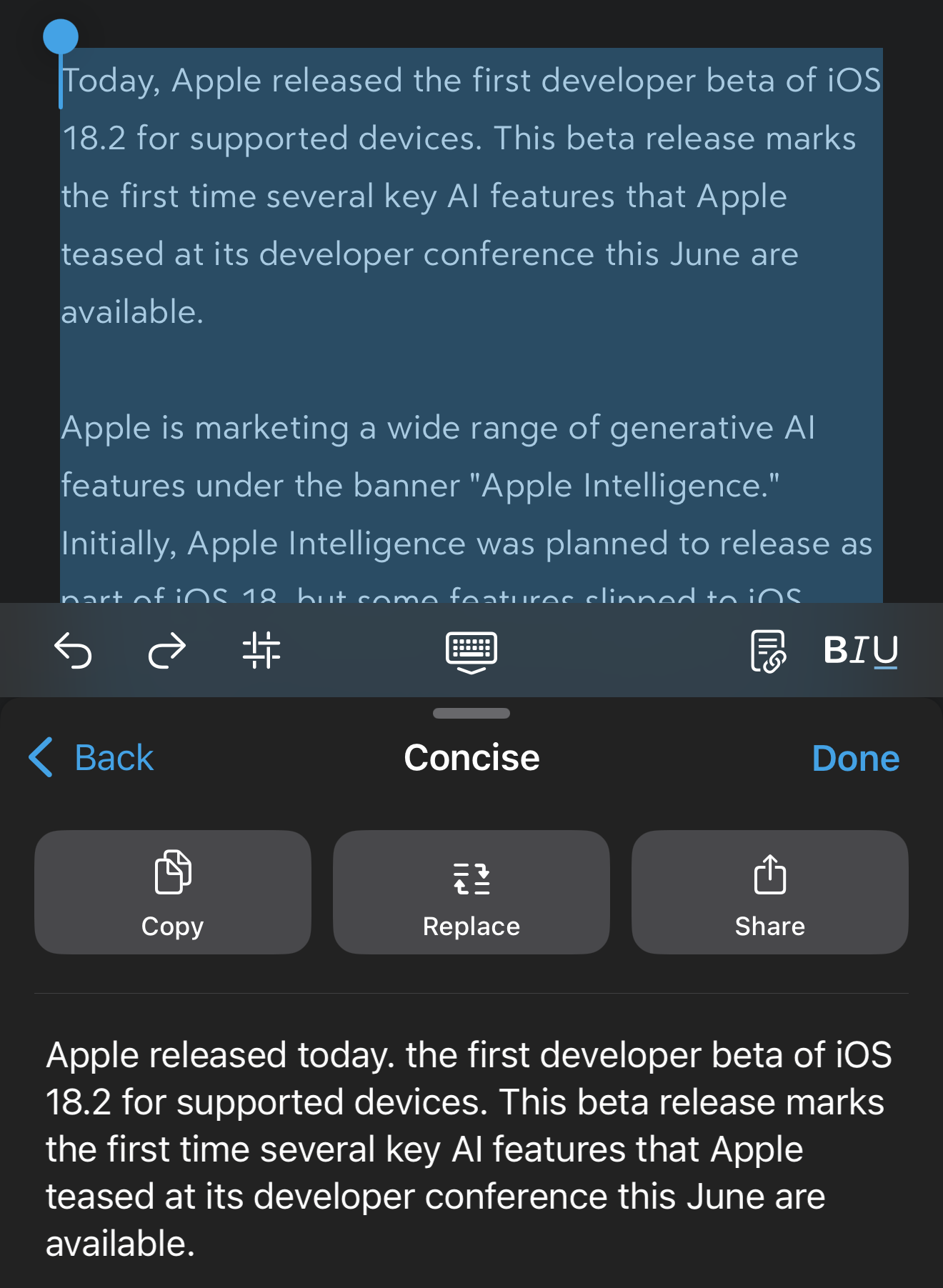

- Rewriting in friendly, professional, or concise voices

- Summaries in prose, key points, bullet point list, or table format

- Text summaries

- Summarize text from Mail messages

- Summarize text from Safari pages

- Notifications

- Reduce Interruptions – Intelligent filtering of notifications to include only ones deemed critical

- Type to Siri

- More conversational Siri

- Photos

- Clean Up (remove an object or person from the image)

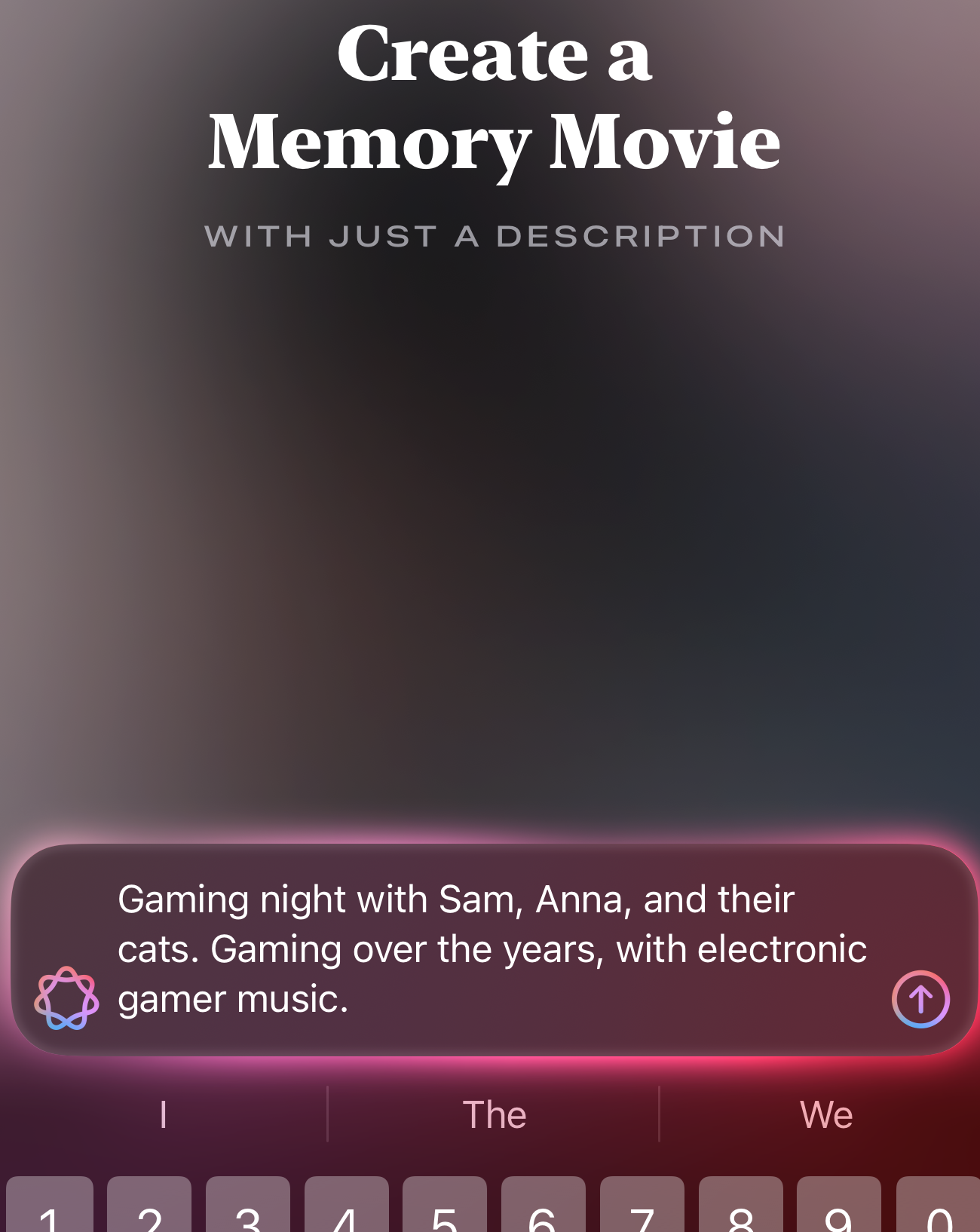

- Generate Memories videos/slideshows from plain language text prompts

- Natural language search

iOS 18.2 developer beta (as of November 5, 2024)

- Image Playground – A prompt-based image generation app akin to something like Dall-E or Midjourney but with a limited range of stylistic possibilities, fewer features, and more guardrails

- Genmoji – Generate original emoji from a prompt

- Image Wand – Similar to Image Playground but simplified within the Notes app

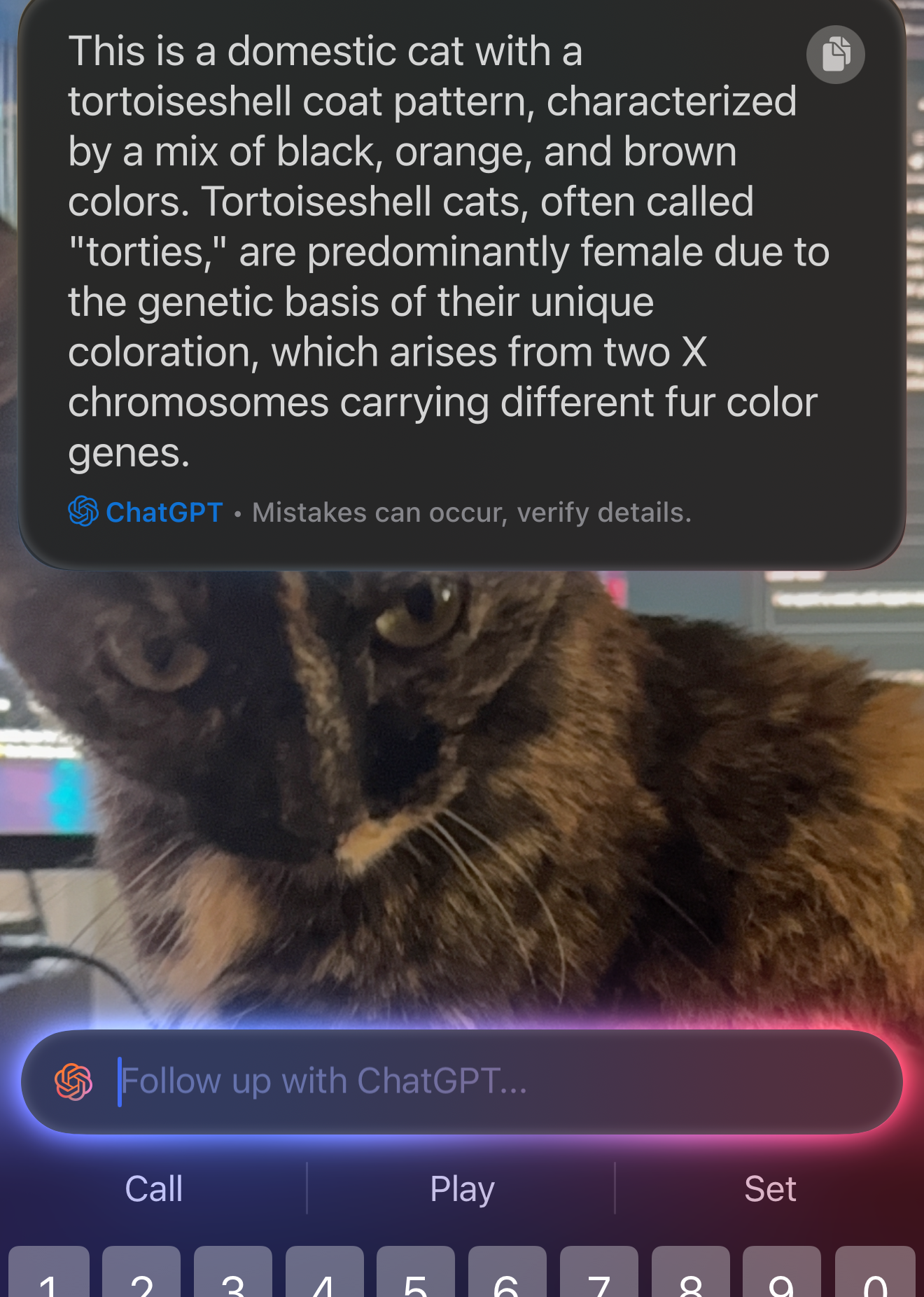

- ChatGPT integration in Siri

- Visual Intelligence – iPhone 16 and iPhone 16 Pro users can use the new Camera Control button to do a variety of tasks based on what’s in the camera’s view, including translation, information about places, and more

- Writing Tools – Expanded with support for prompt-based edits to text

iOS 18.1 is out right now for everybody. iOS 18.2 is scheduled for a public launch sometime in December.

iOS 18.2 will introduce both Visual Intelligence and the ability to chat with ChatGPT via Siri.

Credit: Samuel Axon

A staggered rollout

For several years, Apple has released most of its major new software features for, say, the iPhone in one big software update in the fall. That timeline has gotten fuzzier in recent years, but the rollout of Apple Intelligence has moved further from that tradition than we’ve ever seen before.

Apple announced iOS 18 at its developer conference in June, suggesting that most if not all of the Apple Intelligence features would launch in that single update alongside the new iPhones.

Much of the marketing leading up to and surrounding the iPhone 16 launch focused on Apple Intelligence, but in actuality, the iPhone 16 had none of the features under that label when it launched. The first wave hit with iOS 18.1 last week, over a month after the first consumers started getting their hands on iPhone 16 hardware. And even now, these features are in “beta,” and there has been a wait list.

Many of the most exciting Apple Intelligence features still aren’t here, with some planned for iOS 18.2’s launch in December and a few others coming even later. There will likely be a wait list for some of those, too.

The wait list part makes sense—some of these features put demand on cloud servers, and it’s reasonable to stagger the rollout to sidestep potential launch problems.

The rest doesn’t make as much sense. Between the beta label and the staggered features, it seems like Apple is rushing to satisfy expectations about Apple Intelligence before quality and consistency have fallen into place.

Making AI a harder sell

In some cases, this strategy has led to things feeling half-baked. For example, Writing Tools is available system-wide, but the experience in first-party apps that work with the new Writing Tools API differs from the experience in third-party apps that don’t. The former lets you approve changes piece by piece, but the latter puts you in a take-it-or-leave-it situation with the whole text. The Writing Tools API isn’t coming until iOS 18.2, so that gap will persist for a couple of months, even for third-party apps whose developers would normally want to be on the ball with this.

Further, iOS 18.2 will allow users to tweak Writing Tools rewrites by specifying what they want in a text prompt, but that’s missing in iOS 18.1. Why launch Writing Tools with features missing and user experience inconsistencies when you could just launch the whole suite in December?

That’s just one example, but there are many similar ones. I think there are a couple of possible explanations:

- Apple is trying to satisfy anxious investors and commentators who believe the company is already way too late to the generative AI sector.

- With the original intent to launch it all in the first iOS 18 release, significant resources were spent on Apple Intelligence-focused advertising and marketing around the iPhone 16 in September—and when unexpected problems developing the software features led to a delay for the software launch, it was too late to change the marketing message. Ultimately, the company’s leadership may feel the pressure to make good on that pitch to users as quickly after the iPhone 16 launch as possible, even if it’s piecemeal.

I’m not sure which it is, but in either case, I don’t believe it was the right play.

So many consumers have their defenses up about AI features already, in part because other companies like Microsoft or Google rushed theirs to market without really thinking things through (or caring, if they had) and also because more and more people are naturally suspicious of whatever is labeled the next great thing in Silicon Valley (remember NFTs?). Apple had an opportunity to set itself apart in consumers’ perceptions about AI, but at least right now, that opportunity has been squandered.

Now, I’m not an AI doubter. I think these features and others can be useful, and I already use similar ones every day. I also commend Apple for allowing users to control whether these AI features are enabled at all, which should make AI skeptics more comfortable.

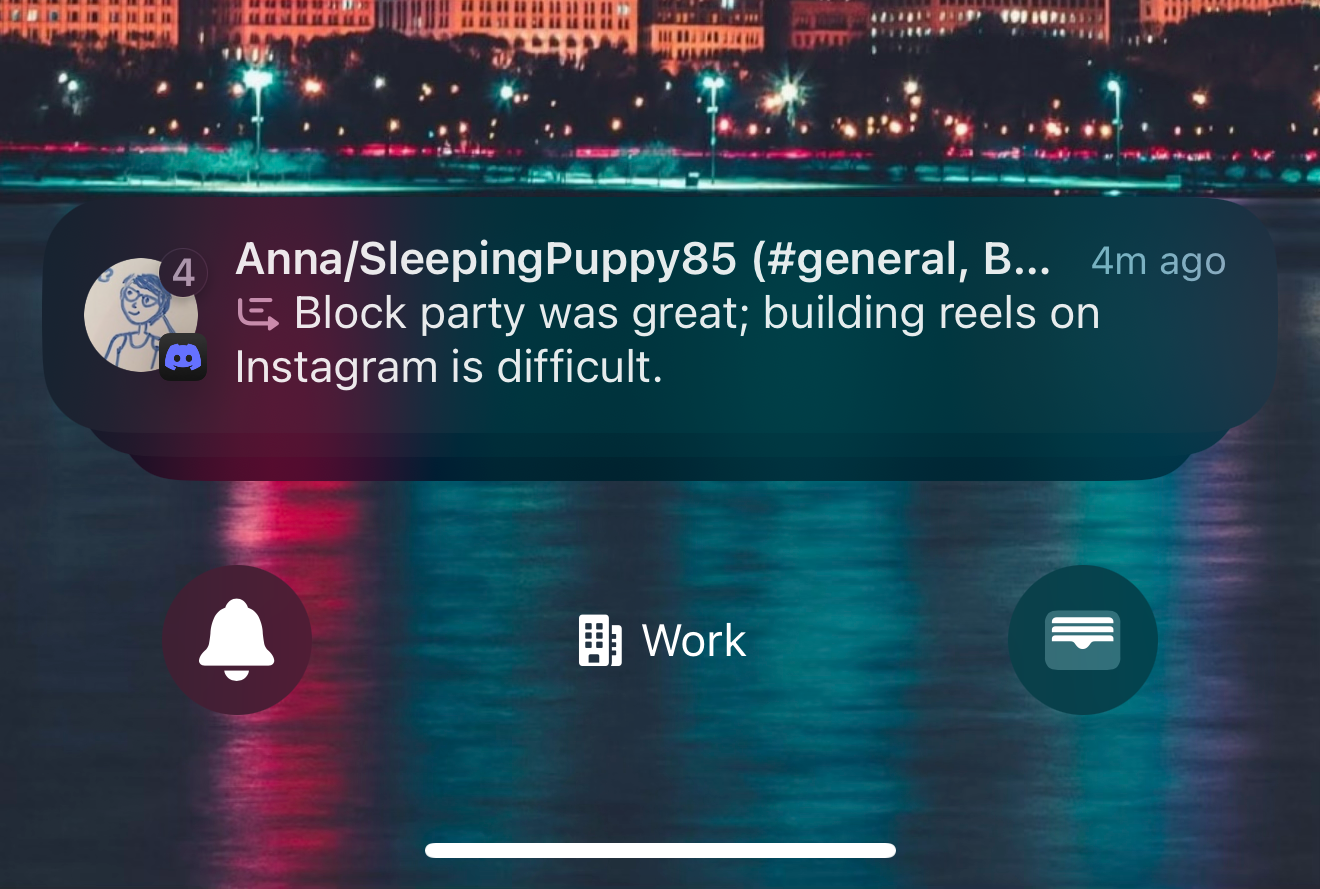

Notification summaries condense all the notifications from a single app into one or two lines, like with this lengthy Discord conversation here. Results are hit or miss.

Credit: Samuel Axon

That said, releasing half-finished bits and pieces of Apple Intelligence doesn’t fit the company’s framing of it as a single, branded product, and it does little to answer objections from users who already assume AI tools will be nonsense.

There’s so much confusion about AI that it makes sense to let those who are skeptical move at their own pace, and it also makes sense to sell them on the idea with fully baked implementations.

Apple still has a more sensible approach than most

Despite all this, I like the philosophy behind how Apple has thought about implementing its AI tools, even if the rollout has been a mess. It’s fundamentally distinct from what we’re seeing from a company like Microsoft, which seems hell-bent on putting AI chatbots everywhere it can to see which real-world use cases emerge organically.

There is no true, ChatGPT-like LLM chatbot in iOS 18.1. Technically, there’s one in iOS 18.2, but only because you can tell Siri to refer you to ChatGPT on a case-by-case basis.

Instead, Apple has introduced specific generative AI features peppered throughout the operating system meant to explicitly solve narrow user problems. Sure, they’re all built on models that have resemblances to the ones that power Claude or Midjourney, but they’re not built around this idea that you start up a chat dialogue with an LLM or an image generator and it’s up to you to find a way to make it useful for you.

The practical application of most of these features is clear, provided they end up working well (more on that shortly). As a professional writer, it’s easy for me to dismiss Writing Tools as unnecessary—but obviously, not everyone is a professional writer, or even a decent one. For example, I’ve long held that one of the most positive applications of large language models is their ability to let non-native speakers clean up their writing to make it meet native speakers’ standards. In theory, Apple’s Writing Tools can do that.

Apple Intelligence features augment existing use cases across the OS or add flexibility and power to them, like this new way to generate photo memory movies via text prompt.

Credit: Samuel Axon

I have no doubt that Genmoji will be popular—who doesn’t love a bit of fun in group texts with friends? And many months before iOS 18.1, I was already dropping senselessly gargantuan corporate email threads into ChatGPT and asking for quick summaries.

Apple is approaching AI in a user-centric way that stands in stark contrast to almost every other major player rolling out AI tools. Generative AI is an evolution from machine learning, which is something Apple has been using for everything from iPad screen palm rejection to autocorrect for a while now—to great effect, as we discussed in my interview with Apple AI chief John Giannandrea a few years ago. Apple just never wrapped it in a bow and called it AI until now.

But there was no good reason to rush these features out or to even brand them as “Apple Intelligence” and make a fuss about it. They’re natural extensions of what Apple was already doing. Since they’ve been rushed out the door with a spotlight shining on them, Apple’s AI ambitions have a rockier road ahead than the company might have hoped.

It could take a year or two for this all to come together

Using iOS 18.1, it’s clear that Apple’s large language models are not as effective or reliable as Claude or ChatGPT. It takes time to train models like these, and it looks like Apple started late.

Based on my hours spent with both Apple Intelligence and more established tools from cutting-edge AI companies, I feel the other models crossed a usefulness and reliability threshold a year or so ago. When ChatGPT first launched, it was more of a curiosity than a powerful tool. Now it’s a powerful tool, but that’s a relatively recent development.

In my time with Writing Tools and Notification Summaries in particular, Apple’s models subjectively appear to be around where ChatGPT or Claude were 18 months ago. Notification Summaries almost always miss crucial context in my experience. Writing Tools introduce errors where none existed before.

It’s not hard to spot the huge error that Writing Tools introduced here. This happens all the time when I use it.

Credit: Samuel Axon

More mature models do these things, too, but at a much lower frequency. Unfortunately, Apple Intelligence isn’t far enough along to be broadly useful.

That said, I’m excited to see where Apple Intelligence will be in 24 months. I think the company is on the right track by using AI to target specific user needs rather than just putting a chatbot out there and letting people figure it out. It’s a much better approach than what we see with Microsoft’s Copilot. If Apple’s models cross that previously mentioned threshold of utility—and it’s only a matter of time before they do—the future of AI tools on Apple platforms could be great.

It’s just a shame that Apple didn’t seem to have the confidence to ignore the zeitgeisty commentators and roll out these features only once they were complete and ready, with messaging focusing on user problems instead of “hey, we’re taking AI seriously too.”

Most users don’t care if you’re taking AI seriously, but they do care if the tools you introduce can make their day-to-day lives better. I think they can—it will just take some patience. Users can be patient, but can Apple? It seems not.

Even so, there’s a real possibility that these early pains will be forgotten before long.

Samuel Axon is a senior editor at Ars Technica. He covers Apple, software development, gaming, AI, entertainment, and mixed reality. He has been writing about gaming and technology for nearly two decades at Engadget, PC World, Mashable, Vice, Polygon, Wired, and others. He previously ran a marketing and PR agency in the gaming industry, led editorial for the TV network CBS, and worked on social media marketing strategy for Samsung Mobile at the creative agency SPCSHP. He also is an independent software and game developer for iOS, Windows, and other platforms, and he is a graduate of DePaul University, where he studied interactive media and software development.